
I am a first-year PhD student in the Manchester NLP group, where I am fortunate to be supervised by Prof. Chenghua Lin. Before that, I interned at Alibaba's Institute for Intelligent Computing (Tongyi Qianwen) from February to August 2024. I obtained my bachelor's degree from Jiangnan University and my master's degree from Nanjing University of Science and Technology. My research interests lie in AIGC, multimodal models, information retrieval, and multimodal retrieval. Email / Google Scholar / Twitter / GitHub


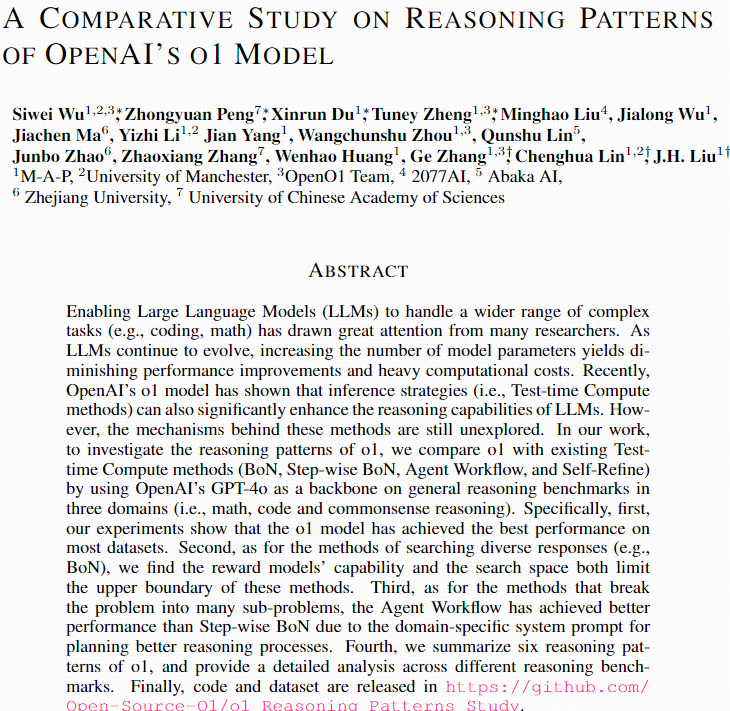

Siwei Wu, Zhongyuan Peng, Xinrun Du, Tuney Zheng, et al. arXiv preprint. Paper / Code

To investigate the reasoning patterns of o1, we compare it with existing test-time compute methods (Best-of-N (BoN), Step-wise BoN, Agent Workflow, and Self-Refine), using OpenAI's GPT-4o as the backbone, on general reasoning benchmarks in three domains: math, code, and commonsense reasoning.


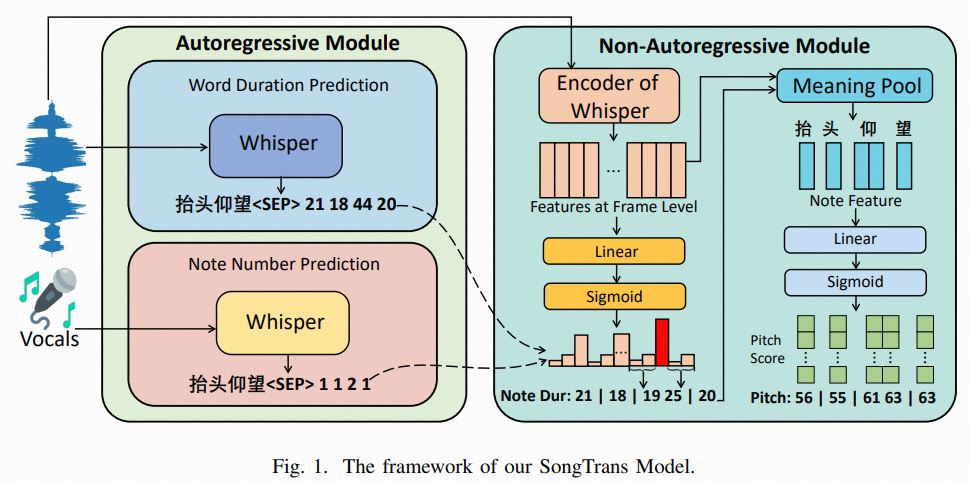

Siwei Wu, Jinzheng He, Ruibin Yuan, Haojie Wei, et al. arXiv preprint. Paper

We first build an annotation pipeline by optimizing existing tools and use it to annotate numerous lyric-note pairs from songs. Based on the annotated data, we then train SongTrans, a unified model that directly transcribes lyrics and notes and aligns them simultaneously, without requiring the songs to be pre-processed.


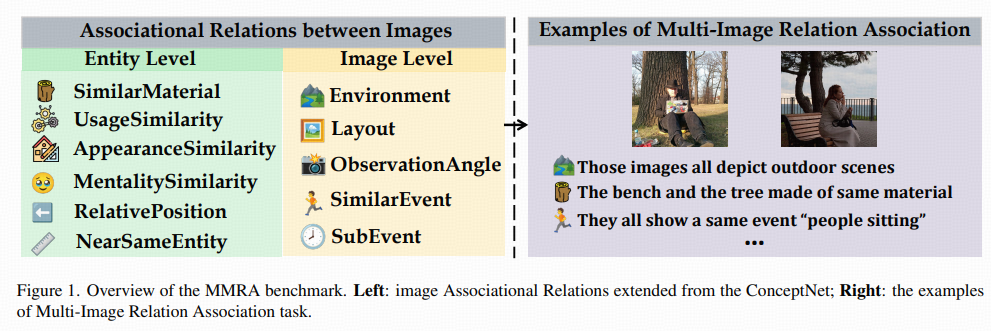

Siwei Wu, Kang Zhu, Yu Bai, Yiming Liang, et al. arXiv preprint. Paper / Code

We propose the multi-image relation association task and a carefully curated benchmark, Multi-granularity Multi-image Relational Association (MMRA), comprising 1,024 samples. To evaluate current large vision-language models (LVLMs) systematically and comprehensively, we establish a system of associative relations among images, derived from the relations in ConceptNet, that contains 11 subtasks (e.g., UsageSimilarity and SubEvent) at two granularity levels ("image" and "entity").


Siwei Wu, Yizhi Li, Kang Zhu, et al. Findings of ACL 2024. Paper / Code

To bridge the gap in multimodal information retrieval (MMIR) for the scientific domain, this work develops SciMMIR, a specialised scientific MMIR benchmark built by extracting domain-relevant data from open-access paper collections.


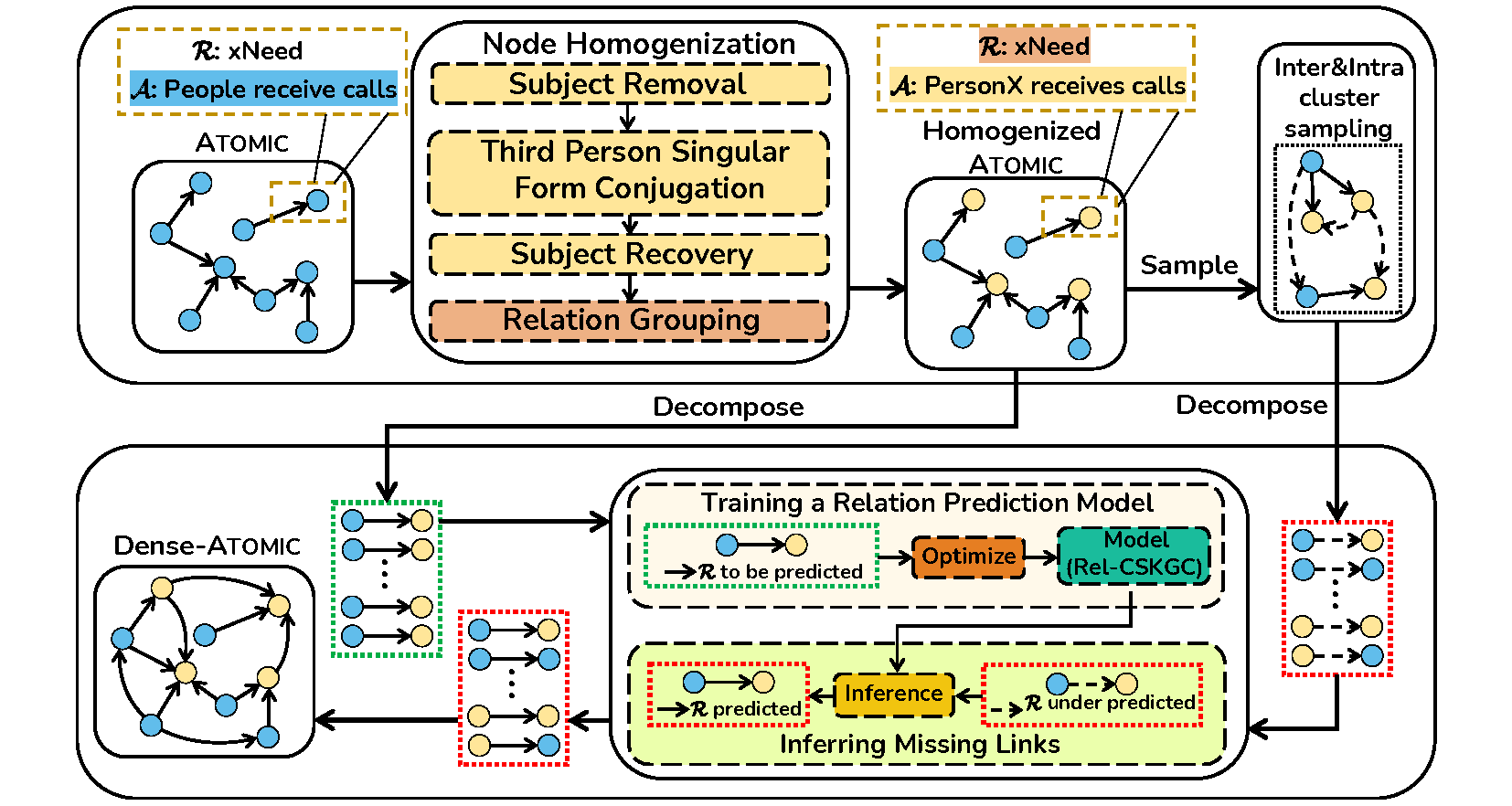

Xiangqing Shen, Siwei Wu, Rui Xia. ACL 2023. Paper / Code

This work addresses the bipartite-graph structure of the commonsense knowledge graph ATOMIC, mines potential multi-hop paths in ATOMIC, and builds Dense-ATOMIC, a more densely connected and complete knowledge graph.


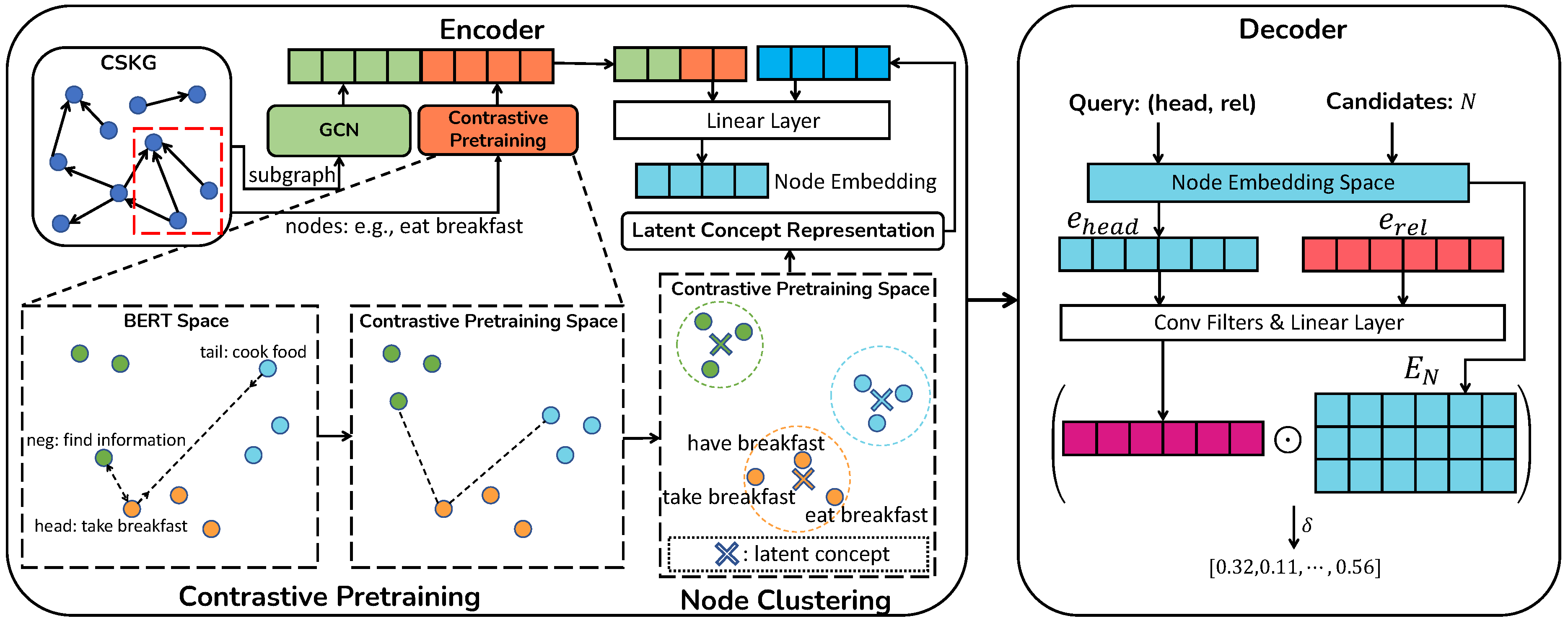

Siwei Wu, Xiangqing Shen, Rui Xia. Findings of ACL 2023. Paper / Code

This work alleviates the problems of edge sparsity and node redundancy in commonsense knowledge graphs and proposes a new commonsense knowledge graph completion framework.

Selected Awards

National Encouragement Scholarship, Jiangnan University, 2018

Honorable Mention, Mathematical Contest in Modeling, 2020

ACL 2023 Outstanding Paper Award


Last modified in Nov. 2022. Design and source code from Jon Barron.
